Quantified Health

Analytics has become a dominant force in business, generating efficiencies, reducing mundane work, and driving growth. However, its impact stretches beyond commerce: healthcare is starting to benefit greatly from its ability to detect, and therefore treat, a broad range of health issues more quickly. In this post we will look specifically at how it can help stem the number of people suffering from some form of visual impairment.

Health and wellbeing remain among the greatest challenges in the world today, and they account for a large share of government spending.

In 2018, total UK healthcare expenditure accounted for 10.0% of GDP. In 2019/20, the government spent £135 billion on the NHS alone (excluding coronavirus-related spending). More than ever, it is critical that innovative technologies find their way quickly into the NHS.

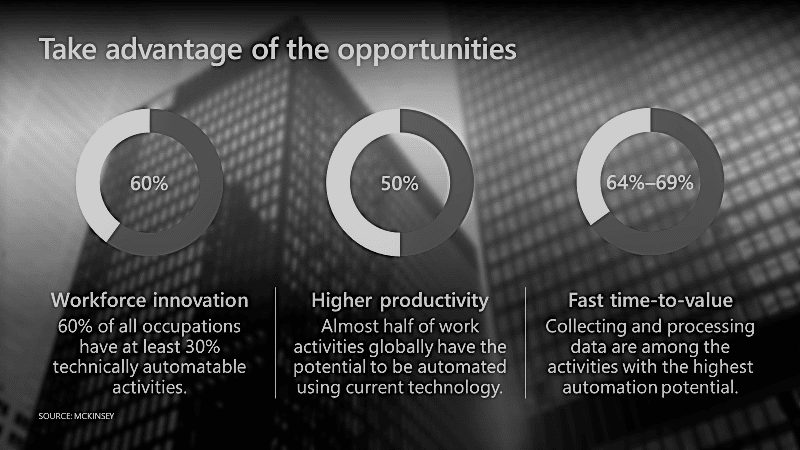

Big data in healthcare is gaining traction. Research publications alone have risen from 50 in 2018 to 350 in 2020, illustrating a cultural shift towards data utilisation in healthcare. ‘Quantified health’ is a relatively new phrase that means the integration of data directly from consumer wearables (pedometers, Fitbits, Muse headbands, etc.), blood pressure cuffs, glucometers, and scales into EMRs (Electronic Medical Records) through smartphone health platforms (e.g., Apple Health, Google Fit, and Samsung Health). These devices can pick up on warning signs faster by tracking changes in behaviour and other key data points. The net result is a more health-aware population and a reduction in healthcare costs.
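To make the idea of ‘tracking changes’ concrete, here is a minimal sketch in Python of one simple way a wearable reading could be flagged as a possible warning sign: compare each new reading with a rolling baseline of the previous week. The function name, the seven-day window, the threshold, and the heart-rate figures are all assumptions made up for illustration; they are not taken from any real device or health platform.

```python
from statistics import mean, stdev


def flag_unusual_readings(readings, window=7, threshold=2.0):
    """Return the indices of readings that deviate sharply from recent history.

    Each reading is compared with the mean and standard deviation of the
    `window` readings before it. A reading more than `threshold` standard
    deviations from that baseline is flagged. Both parameters are
    illustrative defaults, not clinical guidance.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # perfectly flat baseline: skip rather than divide by zero
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up daily resting heart rates (beats per minute), for illustration only.
resting_hr = [62, 61, 63, 62, 60, 61, 62, 63, 61, 74, 62]
print(flag_unusual_readings(resting_hr))  # [9] -> the day with the 74 bpm reading
```

A real system would combine many signals and would need clinical validation; the point here is only that a change relative to a personal baseline can be detected automatically.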

Blindness epidemic

Beyond fitness, data scientists around the world are also tackling other significant health issues, including visual impairment.

According to the World Health Organization, we’re on the verge of a ‘blindness epidemic’. Visual impairments and refractive errors have become a global health issue. An estimated 1.89 billion people are currently living with some form of visual impairment, and this is expected to rise to 5 billion by 2050.

The good news is that there are technologies that can help mitigate the impact of visual impairment. One example is Seeing AI, an app developed by Microsoft AI & Research. It narrates the world for blind and low-vision users, allowing them to use their smartphones to identify everything from objects to the contents of their wallet or even a document.

However, catching the condition early is key. Recent medical data indicates that nearly 70% of blindness cases could have been prevented with early detection and screening.

Weather Health Forecast

Diabetic retinopathy is a complication of diabetes, caused by high blood sugar levels damaging the light-sensitive layer of tissue at the back of the eye, known as the retina. It is one of the leading causes of blindness, resulting in up to 1,700 cases each year in adults in the UK. When people with diabetes visit their general practitioner, they’re often referred to an ophthalmologist (an eye doctor) who can assess their eyes for signs of diabetic retinopathy.

The disease, however, is manageable. If it is detected while a patient is still asymptomatic, the more severe outcomes can be avoided. Still, early diagnosis has proven difficult. As Michael Abràmoff, a retinal specialist and computer scientist, puts it, “we know so well how to treat it, but we simply don’t catch it early enough”. Only half of people with diabetes get their eyes examined every year, as recommended. But assessing the 4 million people affected by diabetes in the UK, and the more than 400 million worldwide, is a massive challenge.

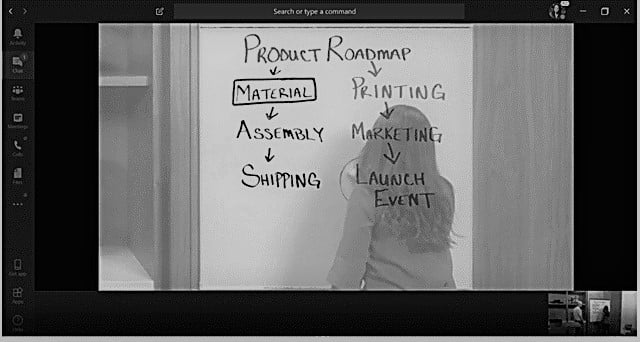

The American company Digital Diagnostics (formerly known as IDx) became the first company to receive clearance from the US Food and Drug Administration (FDA) for an AI analytics platform that makes a diagnosis without physician input. The platform, called IDx-DR, uses Deep Neural Networks (DNN) to detect diabetic retinopathy in primary care. It takes images of the back of the eye, analyses them, and provides a diagnosis, referring the patient to a specialist for treatment if a more-than-mild case is detected. Having been trained on large volumes of retinal image data, the AI system can identify serious cases of the condition without the need for a clinician.
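The referral rule described above (refer only when the case is more than mild) can be sketched in a few lines of Python. This is an illustration of the decision step only, not the IDx-DR software: the `Severity` scale, the `triage` function, and the wording of the outcomes are hypothetical, and the image analysis that would produce the grade is left out entirely.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical grading scale, ordered from least to most severe."""
    NONE = 0
    MILD = 1
    MODERATE = 2
    SEVERE = 3
    PROLIFERATIVE = 4


def triage(grade: Severity) -> str:
    """Apply the 'more than mild' rule to a grade produced by some classifier."""
    if grade > Severity.MILD:
        return "refer to specialist"
    return "routine rescreen"


for grade in Severity:
    print(f"{grade.name:<14} -> {triage(grade)}")
```

Keeping the referral threshold separate from the image classifier makes the rule easy to read and audit, whichever model assigns the grade.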

In effect, this AI technique identifies the problem before it even happens. Its predictive capabilities help determine a patient’s future health condition, allowing healthcare professionals to develop treatment plans that prevent visual impairment altogether. IDx-DR is quick and easy to use: a three-minute scan, followed by a two-minute computer-aided diagnosis, allows a patient to see their health forecast in just five minutes.
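As a rough sense of scale, the figures quoted in this post can be combined into a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that every screening takes the full five minutes and that the ‘only half’ coverage figure applies to the UK’s 4 million people with diabetes; the constant and function names are made up for this example.

```python
UK_DIABETES_POPULATION = 4_000_000  # figure quoted in this post
ANNUAL_COVERAGE = 0.5               # "only half ... get their eyes examined every year"
MINUTES_PER_SCREENING = 3 + 2       # three-minute scan plus two-minute analysis


def screening_hours(people, minutes_each=MINUTES_PER_SCREENING):
    """Total device time, in hours, needed to screen `people` once."""
    return people * minutes_each / 60


# People who currently miss their yearly eye examination, under the assumption above.
unscreened = int(UK_DIABETES_POPULATION * (1 - ANNUAL_COVERAGE))

print(unscreened)                                      # 2000000
print(round(screening_hours(UK_DIABETES_POPULATION)))  # 333333 hours for everyone
print(round(screening_hours(unscreened)))              # 166667 hours for those missed
```

These are estimates under stated assumptions rather than measured results, but they show why an automated five-minute check matters when millions of people need assessing every year.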

Conclusion

Visual impairment is a significant threat to our population. Ophthalmologists widely agree that it is best to diagnose diabetic retinopathy before symptoms are evident. The development of IDx-DR as an application of AI points towards large-scale forecasting of how serious conditions are likely to progress. Healthcare forecasting may just become as common as looking up the weather.

References

https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment

https://www.lasereyesurgeryhub.co.uk/data/visual-impairment-blindness-data-statistics/

https://news.microsoft.com/on-the-issues/2019/08/08/smart-tech-blind-low-vision/

https://docs.microsoft.com/en-us/archive/blogs/msind/mine-ai-network-for-eyecare

https://www.nature.com/articles/d41586-019-01111-y

https://visionaware.org/everyday-living/helpful-products/using-apps/seeing-ai-app/

https://thenextweb.com/plugged/2020/03/09/googles-ai-powered-smart-glasses-help-the-blind-to-see/

https://www.springboard.com/blog/data-science-in-healthcare/

https://journalofbigdata.springeropen.com/articles/10.1186/s40537-019-0217-0